(Edited on 20.09.2020)

In this post I’ll try to clarify some dark corners of character encoding process in C++, going out from the source file, over compilation to the final output. After many years dealing with C++ I still have an impression that this topic is making difficulties, especially to the new C++ programmers (at least regarding to the same stack-overflow questions popping out over and over again).

I will not talk about choosing the string encoding for your project or about string manipulation functions. That’s the topic for an other post.

What I will talk about is, how compilers are dealing with different string encoding’s, how they interpret string literals in the source file, how they store those string literals in the final executable and why those bytes are sometimes not displayed or stored in the database the way you would expect it to. For non-beginner programmers these process should be pretty clear, but for the new programmers I think it will bring some of those “aha” effects after reading this post.

I assume you are C++ programmer and I assume that you have the basic knowledge about character sets and character set encoding, and the difference between those two. You should know about code pages, about unicode and about multibyte and fixed-width character encodings. You should know about UTF-8, UTF-16, UTF-32. You should know about Byte-Order-Marks (BOM). You should know about Little-Endian /and Big-Endian Byte Orderings. You should know about C++ string literal prefixes: u8, u, U.

If you are missing something about, then please read these links before going any further:

If you are ready, then we can start…

We will examine following simple main function:

int main()

{

auto str = "äÄ"; // narrow multibyte string literal

auto u8str = u8"äÄ"; // UTF-8 encoded string literal

auto u16str = u"äÄ"; // UTF-16 encoded string literal

auto u32str = U"äÄ"; // UTF-32 encoded string literal

}

Notice that I skipped the narrow wide character literals L”…”, because I want to keep it straight for know and don’t want to bring additional overhead at the moment. I will say a few words about it and the end.

It contains only 4 string declarations with different string literals, each with the same non-ascii characters: ä and Ä (German’s “Umlaute”). Those characters have different numeric representations (I’m using hexadecimal) in different character sets and character encodings:

| Character set | Character Encoding | ä | Ä |

| Unicode | UTF8 | C3 A4 | C3 84 |

| UTF16 | 00 E4 | 00 C4 | |

| UTF32 | 00 00 00 E4 | 00 00 00 C4 | |

| Code Page 1252 | E4 | C4 | |

| Code Page 437 | 84 | 8E |

1. Step – Source File Encoding

When you edit a source file, your text editor (or ide) is storing those characters you type with some predefined encoding as a source text file. For ex. I have configured my VIM to store my source files with UTF8 encoding. So I would expect all 4 string literals above to be stored according the UTF8 encoding for ä=(C3 A4) and Ä=(C3 84) as the following byte sequence on disk:

C3 A4 C3 84

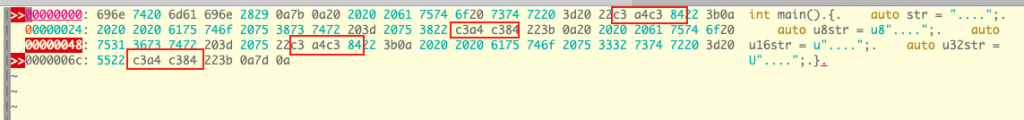

We can easily verify this by looking at a hexadecimal representation of the source file. In VIM for example via :%!xxd. In my case byte representation of main.cpp encoded in UTF-8 looks like this:

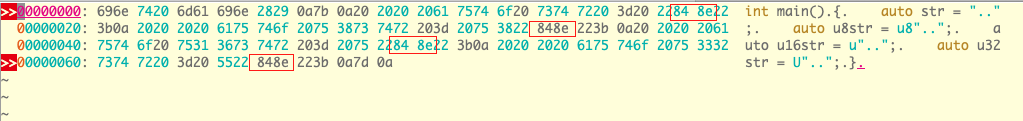

I have marked the content of the 4 string literals in main.cpp source file – as expected they are all UTF8 encoded as äÄ = C3 A4 C3 84

Now we’ll try to change the source file encoding to some other encoding, for example UTF-16. Now here there is a little pitfall: because UTF-16 is using 2 bytes grouping for its encoding, we need to know/define/specify the order of those 2 bytes when storing them to disk (as you know there are little-endian and big-endian byte orderings). For example we can store ä (= 00 E4 in UTF-16 encoding) as:

| 00 E4 | Big-Endian: High Order Byte First |

| E4 00 | Little-Endian: Low Order Byte First |

On Unix/Linux iconv utility can be used to convert text files from one encoding to the other. For ex. to convert main.cpp from UTF-8 encoding to UTF-16 Little-Endian encoding and save it as main_utf16_le.cpp I’m using on my Mac following command:

iconv -f UTF-8 -t UTF-16LE main.cpp > main_utf16_le.cpp

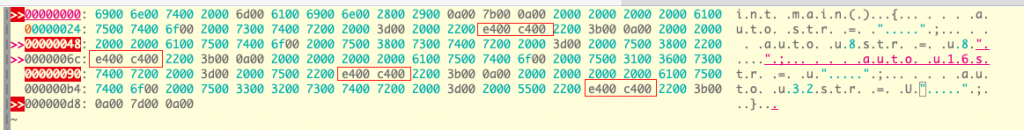

If we now look on the binary hexadecimal representation of main_utf16_le.cpp, we should see that the sequence äÄ is correctly UTF-16 (Little Endian) encoded as E4 00 C4 00 in all 4 string literals:

Let’s try a few more different encodings:

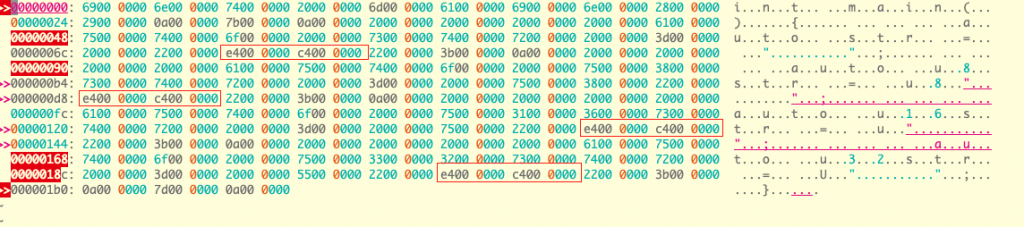

UTF-32 Little Endian: äÄ = E4 00 00 00 C4 00 00 00

iconv -f UTF-8 -t UTF-32LE main.cpp > main_utf32_le.cpp

Windows Code Page 1252: äÄ = E4 C4

iconv -f UTF-8 -t CP1252 main.cpp > main_cp1252.cpp

Code Page 437: äÄ = 84 8E

iconv -f UTF-8 -t CP437 main.cpp > main_cp437.cpp

By now it should be pretty clear to you how Non-ASCII are encoded and stored when you save a source file.

By the way: According to Standard Specification 2.2, only ASCII characters in source file are standard-conform. So:

TIP:

Use only ASCII characters in source files. Export any Non-ASCII string literals in resource files or alike.

2. Step: Make sure that compiler understands Source File Encoding

Before starting compilation at all, the compiler needs to read the source file first. To read the source file correctly it needs to know and use the correct character set and encoding you used for writing the source file. This is specifically important if you have some Non-ASCII characters in the source file.

If you are using IDE for editing and compiling the code, the IDE will normally just do the right thing. It will save the source files with some default predefined encoding (assumed that you don’t mess around with default settings) and the compiler will correctly read those sources.

Otherwise by using custom editors or mixing files from different sources, the things may look different.

The compilers normally try to check the BOM of a file, to see if its some kind of Unicode encoding (UTF-8, UTF-16 or UTF-32). If it can’t find any BOM, then it will probably assume the current system encoding and just use it. And this can be an issue. For example on Windows by having UTF-8 encoded sources without BOM, the Visual Studio will most probably assume Code Page 1252. If you have only ASCII characters in the source file, then this will be no problem, because the encoded ASCII characters are same for UTF-8 and 1252 encodings. Otherwise the Non-ASCII characters will just be interpreted wrong. We will see later how this looks like in practice.

To help the compiler in this special cases und tell it what the encoding of a source file really is, so it can interpret it correctly, there are compiler-specific compile flags for this purpose, for example:

| Compiler | Compiler Flags for Specifying Source File Encoding |

| Visual C++ (cl.exe) | /source-charset:<encoding name> |

| GCC, Clang | -finput-charset=<encoding name> |

3. Step: Executable Character Set/Encoding

After reading and compiling the source file, the compiler needs to store those string literals in the final executable.

What is the encoding of the compiled string literals in the executable ?

Well, it depends on compiler and the kind of string literal. For ex. GCC on Linux/Unix will normally store narrow string literals as UTF-8, whereas Visual C++ on Windows will normally store it as Code Page 1252. But this is only true for narrow string literals. Remember, since C++11 there are special prefixes for string literals (see link above). So for example one can specify in the source file already how the string literal should be encoded within executable, for example:

| “äÄ” | Read äÄ from the source file (in whatever encoding it is) and store it in default character set, whatever it is. This is compiler specific. For ex. Visual C++ on Windows will store it probably encoded in Code Page 1252 (E4 C4), where as Gcc/Clang on Linux/Unix will store it as UTF-8 (C3 A4 C3 84). | |

| u8″äÄ” | means: | Read äÄ from the source file (in whatever encoding it is), find the corresponding Unicode Code Points for those characters, encode it as UTF-8 and store in the executable |

| u”äÄ” | means: | Read äÄ from the source file (in whatever encoding it is), find the corresponding Unicode Code Points for those characters, encode it as UTF-16 and store in the executable (the endianess of the stored byte-doublets will match the machine endianess) |

| U”äÄ” | means: | Read äÄ from the source file (in whatever encoding it is), find the corresponding Unicode Code Points for those characters, encode it as UTF-32 and store in the executable (the endianess of stored byte-quartets will match the machine endianess) |

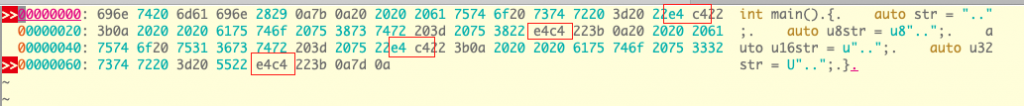

MSVC Compiler with /source-charset:utf-8 compiler flag

MSVC Compiler with /source-charset:utf-8 /execution-charset:utf-8 compiler flags

We see on this example that the narrow string literal got encoded in the resulting executable depending on the compiler flag (in this case /execution-charset:utf-8), but the other string literals with provided encoding information (u8, u, U) stay unaffected.